| Fangshuo Liao | Wenyi Su | Anastasios Kyrillidis |

| Computer Science Department, Rice University and Ken Kennedy Institute (K2I) |

| Code [GitHub] | Paper [arXiv] |

Principal Component Analysis (PCA) is a fundamental tool in machine learning and data analysis. We propose a distributed PCA framework that enables multiple workers to compute distinct eigenvectors simultaneously using a novel Parallel Deflation Algorithm. Our method allows for asynchronous updates while maintaining provable convergence, addressing a key theoretical gap in model-parallel distributed PCA. We demonstrate that our approach achieves comparable performance to EigenGame-\(\mu\), the state-of-the-art PCA solver, while providing stronger theoretical guarantees.

PCA is widely used in dimensionality reduction and feature extraction. Traditional centralized approaches struggle with scalability in large datasets, necessitating distributed methods. Model-parallel PCA algorithms, such as EigenGame, have demonstrated success in distributing computations across multiple workers, but they rely on strict sequential dependencies.

We introduce Parallel Deflation, a model-parallel PCA approach that breaks the strict dependencies of previous methods, allowing multiple workers to refine their eigenvector estimates asynchronously. Our theoretical analysis provides provable convergence guarantees, a missing piece in prior model-parallel approaches.

Several approaches have been explored for distributed PCA:

Our work advances model-parallel PCA by removing strict sequential dependencies and providing provable convergence results.

We focus on computing the top-\(K\) eigenvectors of an empirical covariance matrix \(\Sigma\). Given a dataset \(Y\) with \(n\) samples and \(d\) features, the covariance matrix is given by: \(\Sigma = Y^\top Y\). The goal is to find the top-\(K\) eigenvectors of \(\Sigma\) efficiently in a distributed setting, without requiring strict sequential dependencies.

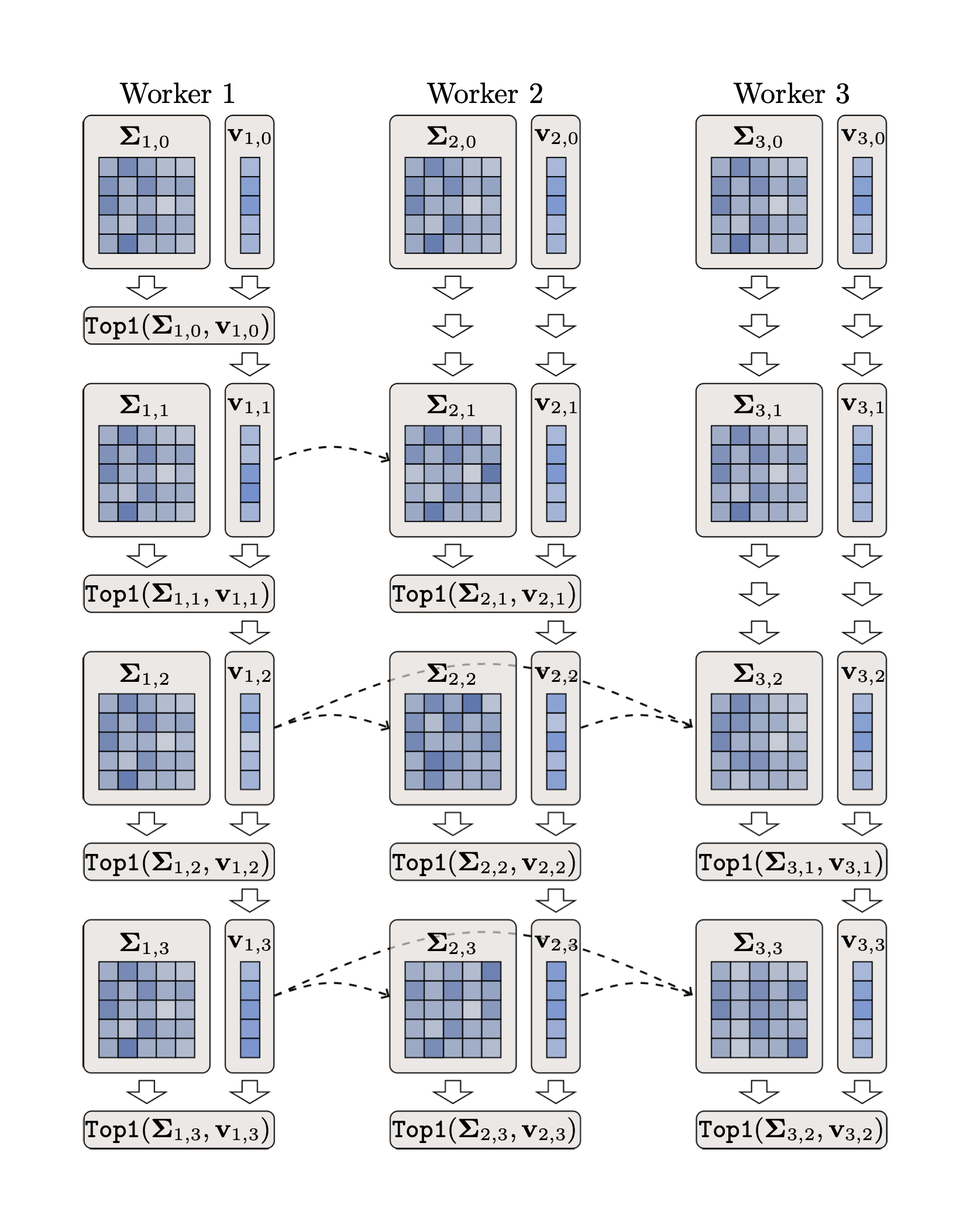

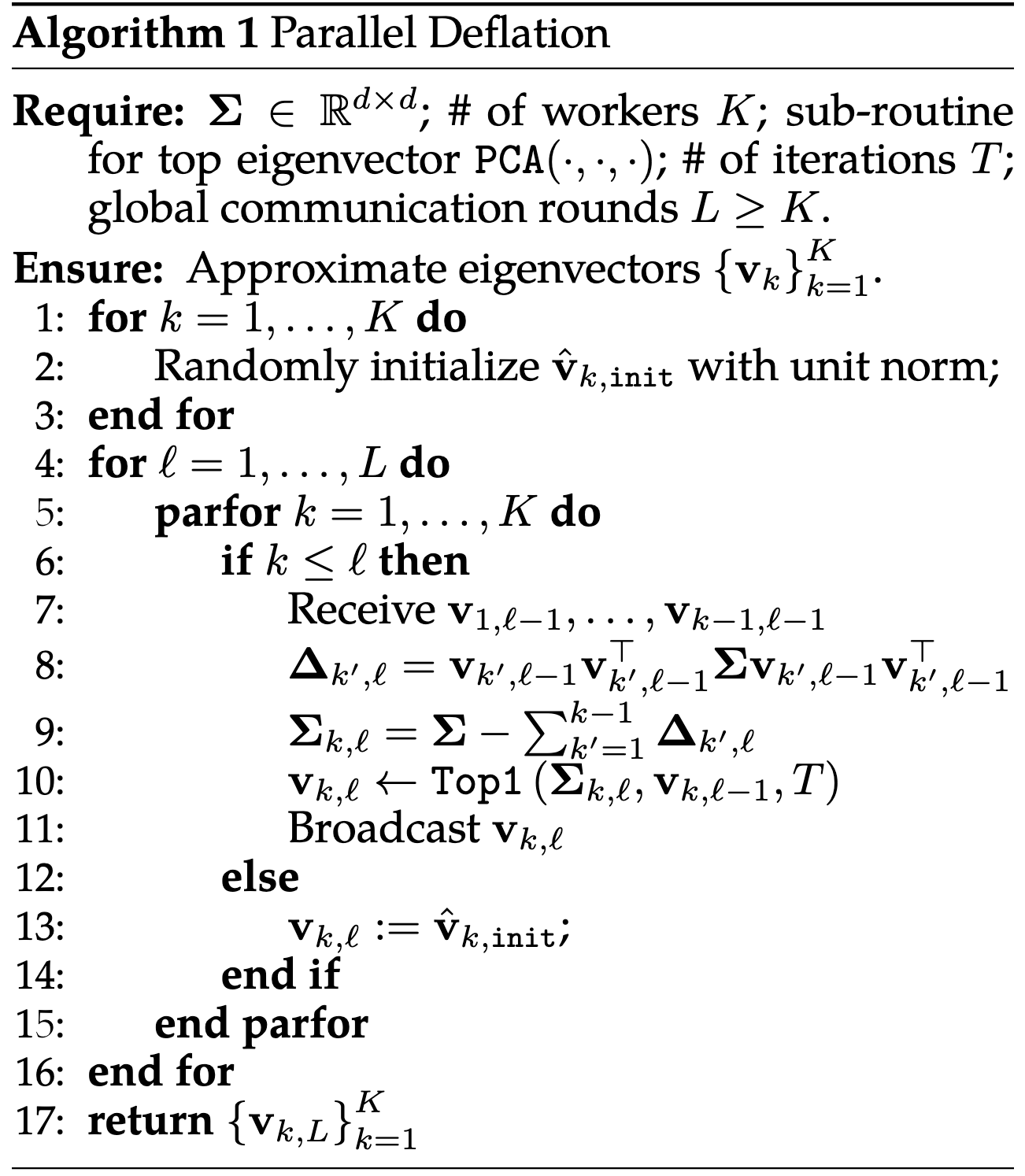

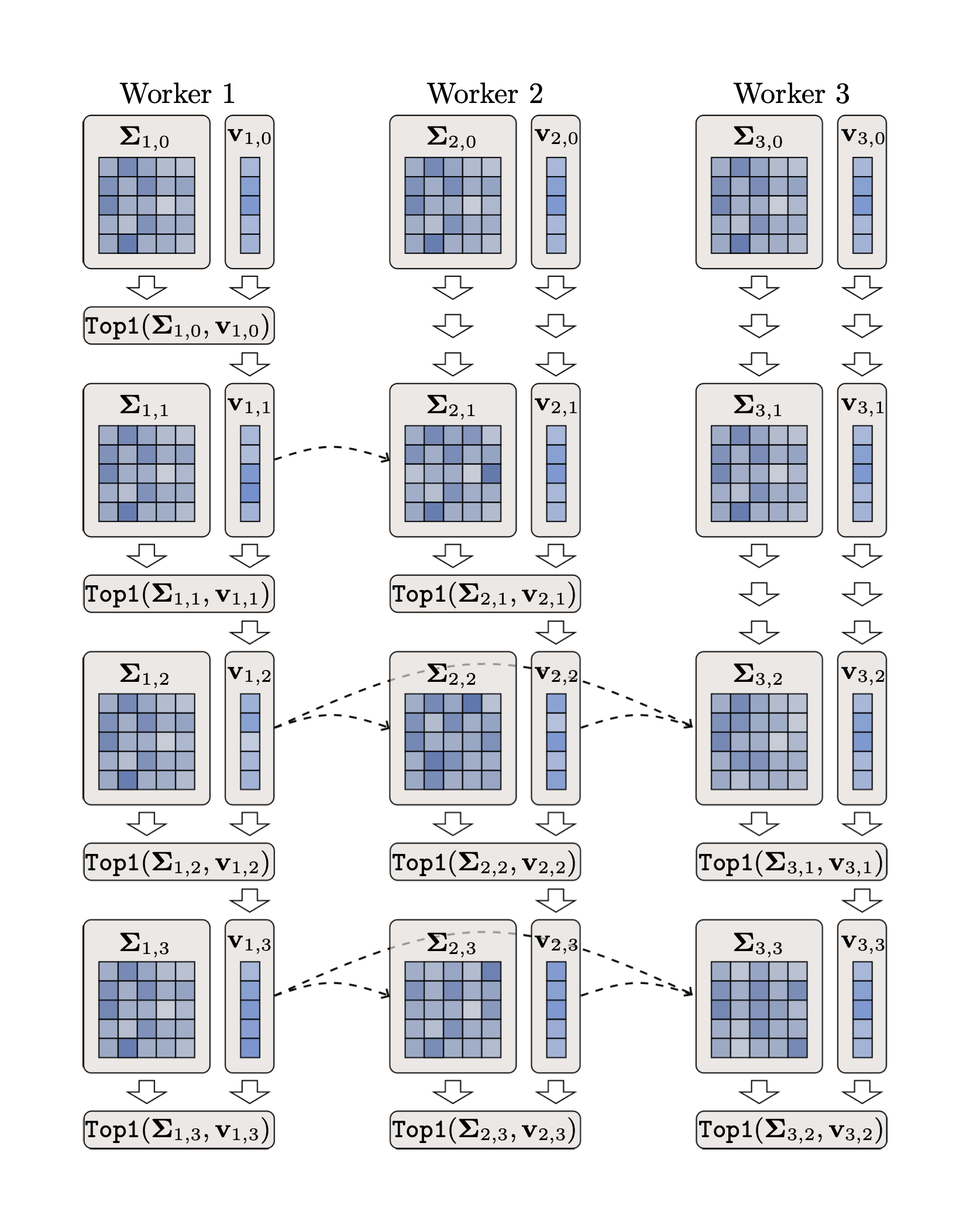

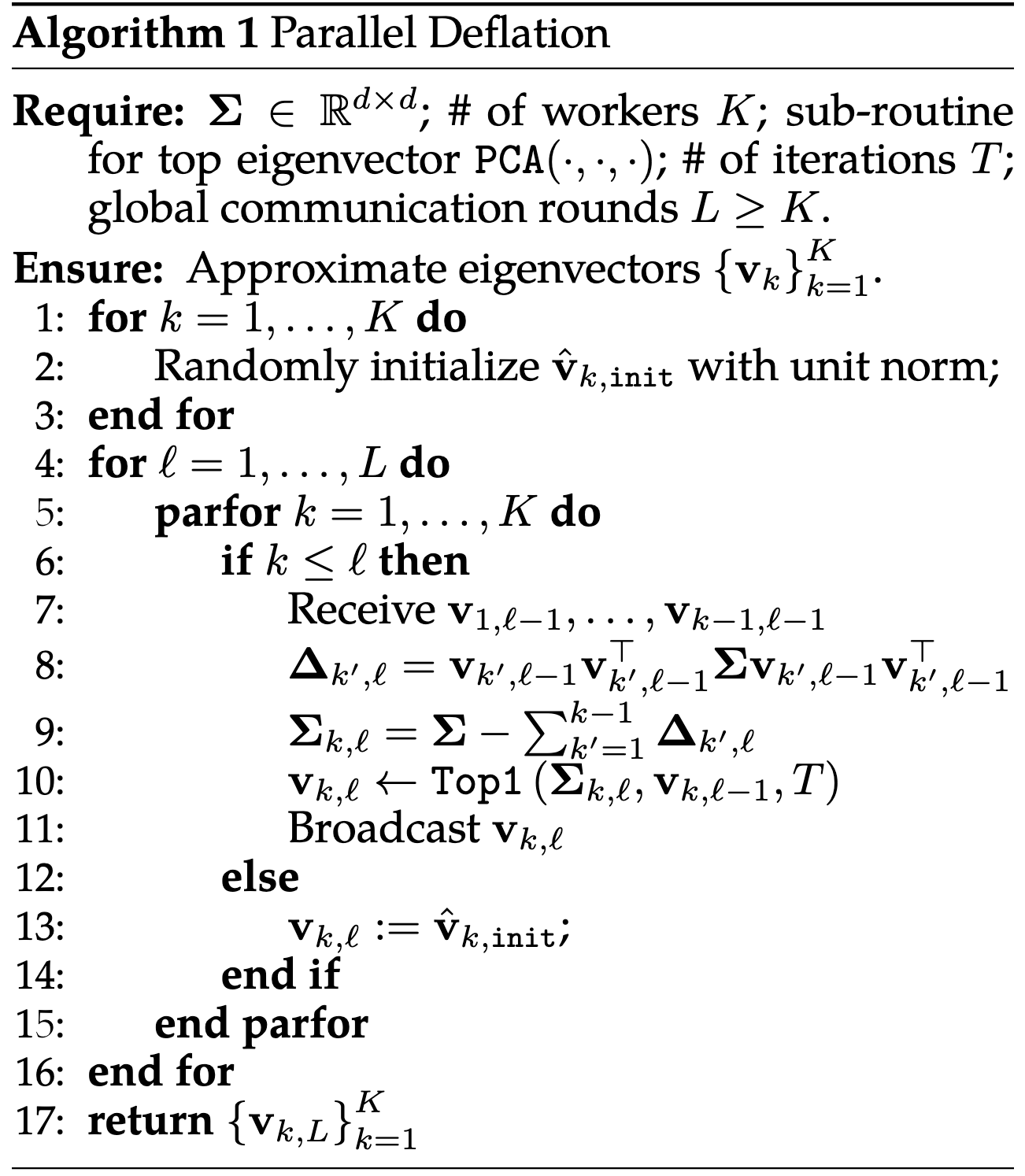

Our algorithm distributes the computation of \(K\) principal components across \(K\) workers. Unlike traditional deflation methods that require sequential eigenvector computation, we introduce a parallel iterative approach:

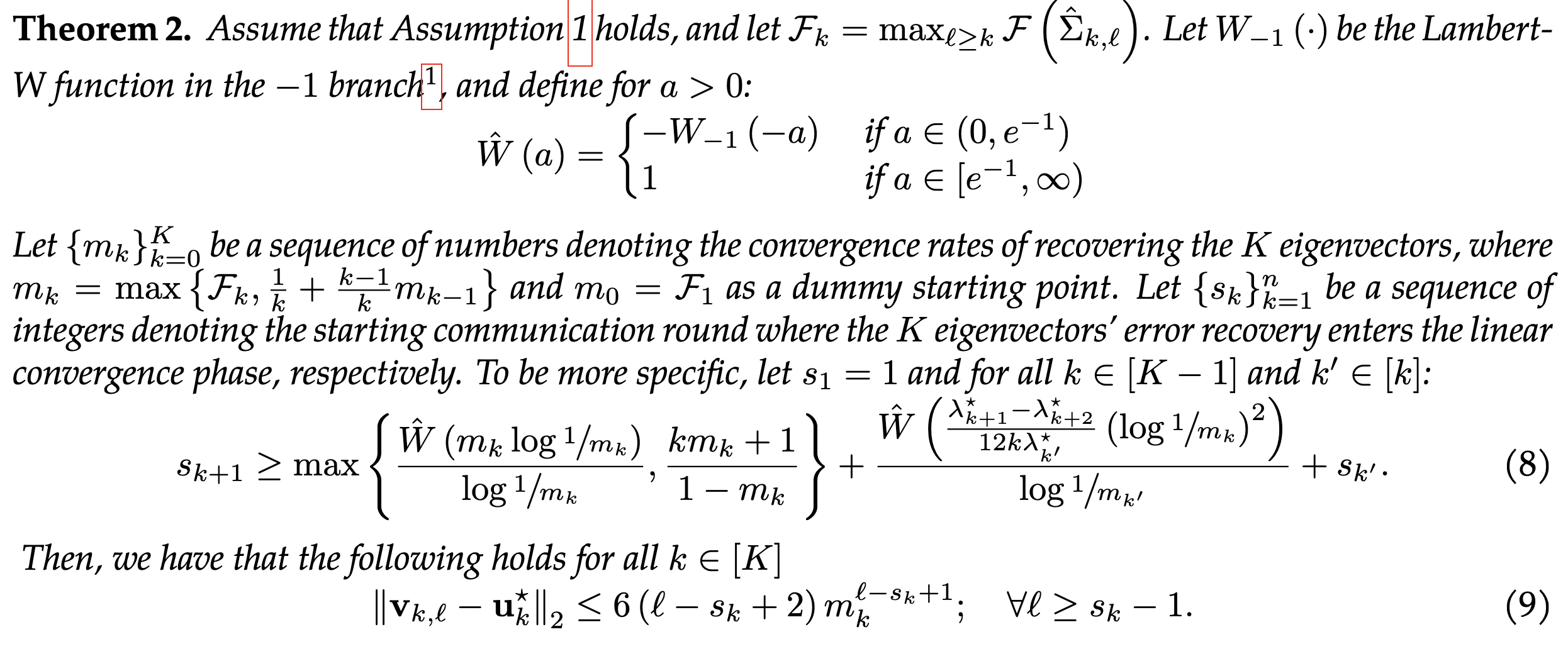

We provide theoretical guarantees for the convergence of the Parallel Deflation Algorithm. Our analysis shows that:

These results establish a strong theoretical foundation for model-parallel PCA, addressing a key gap in prior work.

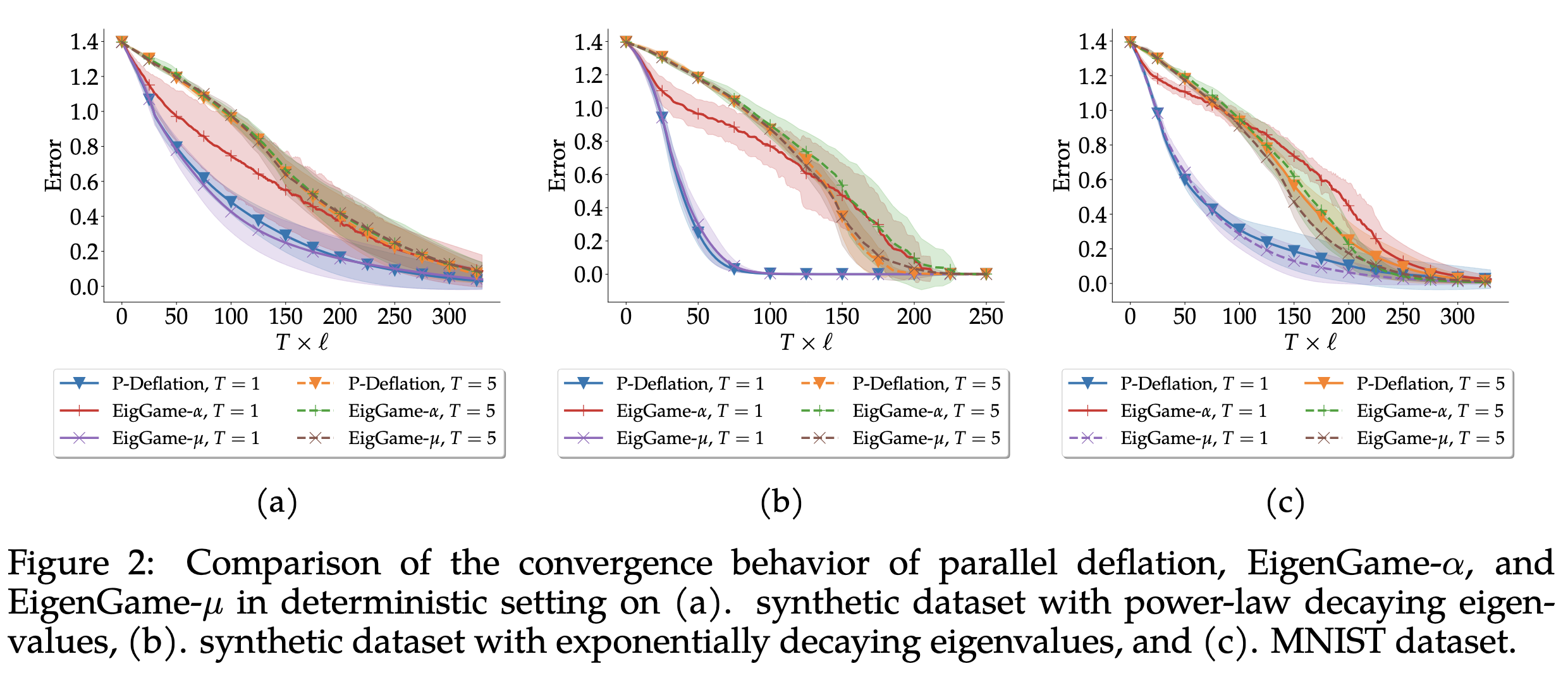

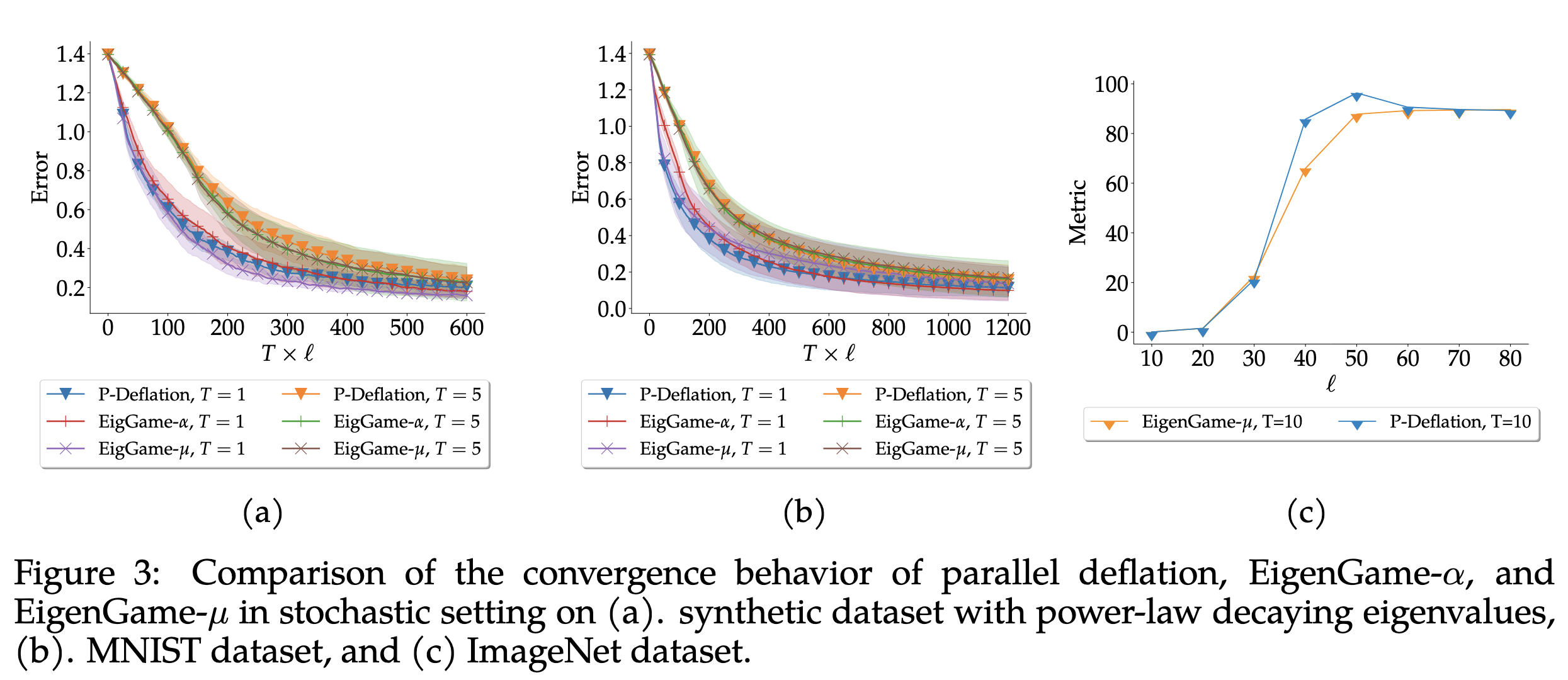

We compare Parallel Deflation against EigenGame-\(\alpha\) and EigenGame-\(\mu\) on synthetic datasets and real-world benchmarks such as MNIST and ImageNet.

We present Parallel Deflation, a novel model-parallel PCA framework that removes sequential dependencies and provides provable convergence guarantees. Future work includes:

Supported by the Ken Kennedy Institute at Rice University.